No, you’re not imagining things.

If you’ve recently checked your server logs or analytics dashboard and spotted strange user agents like GPTBot or ClaudeBot, you’re seeing the impact of a new wave of visitors: AI and LLM crawlers.

These bots are part of large-scale efforts by AI companies to train and refine their large language models. Unlike traditional search engine crawlers that index content methodically, AI crawlers operate a bit more… aggressively.

To put it into perspective, OpenAI’s GPTBot generated 569 million requests in a single month on Vercel’s network. For websites on shared hosting plans, that kind of automated traffic can cause real performance headaches.

This article addresses the #1 question from hosting and sysadmin forums: “Why is my site suddenly slow or using so much bandwidth without more real users?” You’ll also learn how switching to a dedicated server can give you back the control, stability, and speed you need.

Understanding AI and LLM Crawlers and Their Impact

What are AI Crawlers?

AI crawlers, also known as LLM crawlers, are automated bots designed to extract large volumes of content from websites to feed artificial intelligence systems.

These crawlers are operated by major tech companies and research groups working on generative AI tools. The most active and recognizable AI crawlers include:

GPTBot (OpenAI)

ClaudeBot (Anthropic)

PerplexityBot (Perplexity AI)

Google-Extended (Google)

Amazonbot (Amazon)

CCBot (Common Crawl)

Yeti (Naver)

Bytespider (ByteDance, TikTok’s parent company)

New crawlers are emerging frequently as more companies enter the LLM space. This rapid growth has introduced a new category of traffic that behaves differently from conventional web bots.

How AI Crawlers Differ from Traditional Search Bots

Traditional bots like Googlebot or Bingbot crawl websites in an orderly, rules-abiding fashion. They index your content to display in search results and typically throttle requests to avoid overwhelming your server.

AI crawlers, as we pointed out earlier, are much more aggressive. They:

Request large batches of pages in short bursts

Disregard crawl delays or bandwidth-saving guidelines

Extract full page text and sometimes attempt to follow dynamic links or scripts

Operate at scale, often scanning thousands of websites in a single crawl cycle

One Reddit user reported that GPTBot alone consumed 30 TB of bandwidth from their site in just one month, without any clear business benefit to the site owner.

Image credit: Reddit user, Isocrates Noviomagi

Incidents like this are becoming more common, especially among websites with rich textual content like blogs, documentation pages, or forums.

If your bandwidth usage is increasing but human traffic isn’t, AI crawlers may be to blame.

Why Shared Hosting Environments Struggle

When you’re on a shared server, your site performance isn’t just affected by your visitors. It’s also influenced by what everyone else on the server is dealing with. And lately, what they’re all dealing with is a silent surge in “fake” traffic that eats up CPU and memory and runs up your bandwidth bill in the background.

This sets the stage for a bigger discussion: how can website owners protect performance in the face of rising AI traffic?

The Hidden Costs of AI Crawler Traffic on Shared Hosting

Shared hosting is perfect if your priority is affordability and ease, but it comes with trade-offs.

When multiple websites reside on the same server, they share finite resources like CPU, RAM, bandwidth, and disk I/O. This setup works well when traffic stays predictable, but AI crawlers don’t play by those rules. Instead, they tend to generate intense and sudden spikes in traffic.

A recurring issue in shared hosting is what’s called “noisy neighbor syndrome.” One site experiencing high traffic or resource consumption ends up affecting everyone else. In the case of AI crawlers, it only takes one site to attract attention from these bots to destabilize performance across the server.

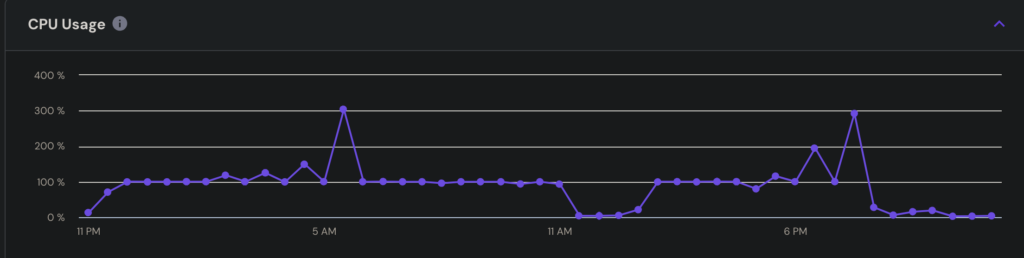

This isn’t theoretical. System administrators have reported CPU usage spiking to 300% during peak crawler activity, even on optimized servers.

Image source: GitHub user, ‘galacoder’

On shared infrastructure, these spikes can lead to throttling, temporary outages, or delayed page loads for every customer hosted on that server.

Because this traffic is machine-generated, it doesn’t convert and it doesn’t engage. In online advertising terms, it is classified as General Invalid Traffic (GIVT).

Beyond raw performance, AI crawler traffic also affects your technical SEO, because it slows your site down.

Google has made it clear: slow-loading pages hurt your rankings. Core Web Vitals such as Largest Contentful Paint (LCP) are part of Google’s page experience signals, and a slow Time to First Byte (TTFB), while not itself a Core Web Vital, directly delays LCP. If crawler traffic delays your load times, it can chip away at your visibility in organic search, costing you clicks, customers, and conversions.

And since many of these crawlers provide no SEO benefit in return, their impact can feel like a double loss: degraded performance and no upside.

Dedicated Servers: Your Shield Against AI Crawler Overload

Unlike shared hosting, dedicated servers isolate your site’s resources, meaning no neighbors, no competition for bandwidth, and no slowdown from someone else’s traffic.

A dedicated server gives you the keys to your infrastructure. That means you can:

Adjust server-level caching policies

Fine-tune firewall rules and access control lists

Implement custom scripts for traffic shaping or bot mitigation

Set up advanced logging and alerting to catch crawler surges in real time

This level of control isn’t available on shared hosting, and it is often limited on managed VPS plans. When AI bots spike resource usage, you need to be able to defend your stack proactively. With dedicated infrastructure, you can absorb traffic spikes without losing performance. Your backend systems (checkout pages, forms, login flows) continue to function as expected, even under load.

That kind of reliability translates directly to customer trust. When every click counts, every second saved matters.
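The alerting idea from the list above can be sketched as a sliding-window counter. This is a minimal illustration rather than a production monitor: the `SurgeDetector` name, the 60-second window, and the threshold are all assumptions you would tune against your own traffic.

```python
from collections import deque


class SurgeDetector:
    """Flags when the number of requests inside a sliding window exceeds a limit."""

    def __init__(self, window_seconds: float, max_requests: int) -> None:
        self.window_seconds = window_seconds
        self.max_requests = max_requests
        self._timestamps = deque()  # request times, oldest first

    def record(self, timestamp: float) -> bool:
        """Record one request and return True if the window is over the limit."""
        self._timestamps.append(timestamp)
        # Drop requests that have fallen out of the window.
        cutoff = timestamp - self.window_seconds
        while self._timestamps and self._timestamps[0] <= cutoff:
            self._timestamps.popleft()
        return len(self._timestamps) > self.max_requests


if __name__ == "__main__":
    # Hypothetical limit: more than 3 requests within 60 seconds counts as a surge.
    detector = SurgeDetector(window_seconds=60, max_requests=3)
    for second in (0, 1, 2, 3):
        if detector.record(second):
            print(f"surge detected at t={second}s")
```

In practice you would feed this from your access log or request middleware, keep one detector per bot user agent, and send the alert to whatever notification channel your team already uses.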

Dedicated Hosting Pays for Itself

It’s true: dedicated hosting costs more up front than shared or VPS plans. But when you account for the hidden costs of crawler-related slowdowns—lost traffic, SEO drops, support tickets, and missed conversions—the equation starts to shift.

A dedicated server doesn’t just eliminate the symptoms; it removes the root cause. For websites generating revenue or handling sensitive interactions, the stability and control it offers often pays for itself within months.

Controlling AI Crawlers With Robots.txt and LLMS.txt

If your site is experiencing unexpected slowdowns or resource drain, limiting bot access may be one of the most effective ways to restore stability, without compromising your user experience.

Robots.txt Still Matters

Most AI crawlers from major providers like OpenAI and Anthropic now respect robots.txt directives. By setting clear disallow rules in this file, you can instruct compliant bots not to crawl your site.

It’s a lightweight way to reduce unwanted traffic without needing to install firewalls or write custom scripts. And many companies already use it for managing SEO crawlers, so extending it to AI bots is a natural next step.
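As a rough illustration, the sketch below embeds a small robots.txt that blocks GPTBot and ClaudeBot while leaving other bots alone, then uses Python’s standard `urllib.robotparser` to check how a compliant crawler would read it. The `can_crawl` helper and the example.com URLs are placeholders, and real crawlers may handle edge cases differently.

```python
from urllib.robotparser import RobotFileParser

# Example rules: block two AI crawlers, allow everything else.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: ClaudeBot
Disallow: /

User-agent: *
Allow: /
"""


def can_crawl(bot_token: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if a compliant bot with this product token may fetch the URL.

    Pass the bot's product token (for example "GPTBot"), not the full
    User-Agent header.
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(bot_token, url)


if __name__ == "__main__":
    for bot in ("GPTBot", "ClaudeBot", "Googlebot"):
        allowed = can_crawl(bot, "https://example.com/blog/post")
        print(f"{bot}: {'allowed' if allowed else 'blocked'}")
```

Keep in mind that robots.txt is a request, not an enforcement mechanism. It only reduces traffic from bots that choose to honor it.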

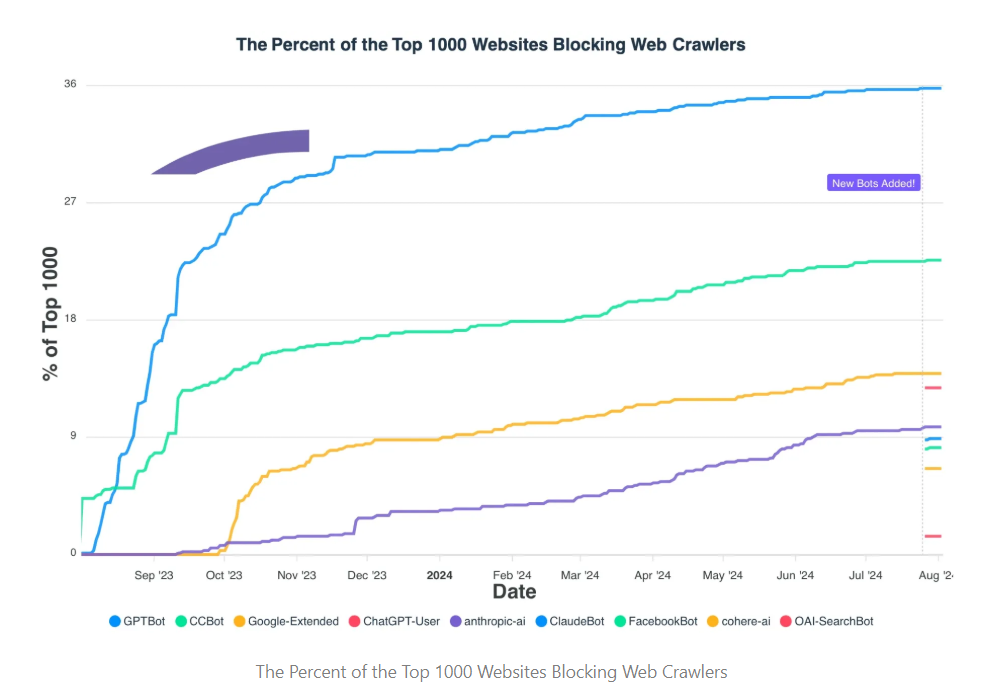

By August 2024, over 35% of the top 1000 websites in the world had blocked GPTBot using robots.txt. This is a sign that site owners are taking back control over how their content is accessed.

Image source: PPC LAND

A New File for a New Challenge: LLMS.txt

In addition to robots.txt, a newer proposal called llms.txt is starting to gain attention. It is still in its early adoption phase, and AI providers are not required to honor it. The file is a Markdown document placed at the root of your site that points language models to a concise, curated version of your most important content.

Unlike robots.txt, which governs crawl behavior, llms.txt doesn’t grant or deny access. Instead, it tells AI tools which pages best represent your site, which may reduce their need to work through every URL. It’s a subtle but important shift as AI development increasingly intersects with web publishing.

Using both files together gives you a fuller toolkit for managing crawler traffic, especially as new bots appear and training models evolve.

Below is a feature-by-feature comparison of robots.txt and llms.txt:

Choose the Right Strategy for Your Business

Not every website needs to block AI bots entirely. For some, increased visibility in AI-generated answers could be beneficial. For others—especially those concerned with content ownership, brand voice, or server load—limiting or fully blocking AI crawlers may be the smarter move.

If you’re unsure, start by reviewing your server logs or analytics platform to see which bots are visiting and how frequently. From there, you can adjust your approach based on performance impact and business goals.
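One way to run that review is a short script over your access log. The sketch below assumes the common “combined” log format used by Apache and Nginx; the `summarize` function and the sample log lines are made up for illustration, so adjust the pattern if your server logs in a different layout.

```python
import re
from collections import Counter
from typing import Iterable, Tuple

# Substrings to look for in the User-Agent header (case-insensitive).
# Google-Extended is left out: it is a robots.txt control token, not a
# user agent string that appears in access logs.
AI_BOT_TOKENS = ("GPTBot", "ClaudeBot", "PerplexityBot", "Amazonbot", "CCBot", "Bytespider")

# Tail of a combined-format line: "request" status bytes "referer" "user-agent"
LOG_TAIL = re.compile(
    r'"[^"]*" (?P<status>\d{3}) (?P<bytes>\d+|-) "[^"]*" "(?P<agent>[^"]*)"$'
)


def summarize(lines: Iterable[str]) -> Tuple[Counter, Counter]:
    """Count requests and response bytes per AI bot; everything else is 'other'."""
    requests = Counter()
    bytes_sent = Counter()
    for line in lines:
        match = LOG_TAIL.search(line.strip())
        if not match:
            continue  # skip lines that are not in the expected format
        agent = match.group("agent").lower()
        bot = next((t for t in AI_BOT_TOKENS if t.lower() in agent), "other")
        requests[bot] += 1
        size = match.group("bytes")
        bytes_sent[bot] += 0 if size == "-" else int(size)
    return requests, bytes_sent


# Made-up sample lines using documentation IP ranges.
SAMPLE_LOG = [
    '203.0.113.7 - - [10/Mar/2025:10:00:00 +0000] "GET /blog/ HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (compatible; GPTBot/1.1)"',
    '203.0.113.7 - - [10/Mar/2025:10:00:01 +0000] "GET /docs/ HTTP/1.1" 200 2048 "-" "Mozilla/5.0 (compatible; GPTBot/1.1)"',
    '198.51.100.4 - - [10/Mar/2025:10:00:02 +0000] "GET / HTTP/1.1" 200 1000 "-" "Mozilla/5.0 (X11; Linux x86_64) Firefox/124.0"',
]

if __name__ == "__main__":
    requests, bytes_sent = summarize(SAMPLE_LOG)
    for bot, count in requests.most_common():
        print(f"{bot}: {count} requests, {bytes_sent[bot]} bytes")
```

To use it on a real log, pass an open file object to `summarize` instead of `SAMPLE_LOG`. User agents can be spoofed, so treat the totals as a starting point rather than proof of who is crawling.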

Technical Strategies That Require Dedicated Server Access

Dedicated servers unlock the technical flexibility needed to not just respond to crawler activity, but get ahead of it.

Implementing Rate Limits

One of the most effective ways to control server load is by rate-limiting bot traffic. This involves setting limits on how many requests can be made in a given timeframe, which protects your site from being overwhelmed by sudden spikes.

But to do this properly, you need server-level access, and that’s not something shared environments typically provide. On a dedicated server, rate limiting can be customized to suit your business model, user base, and bot behavior patterns.
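To make the idea concrete, here is a minimal token-bucket sketch, one common way to implement rate limiting. It is not tied to any particular web server: the `TokenBucket` and `PerClientLimiter` names and the example limits are assumptions, and in production you would more likely configure the equivalent feature in your web server or firewall.

```python
class TokenBucket:
    """Allows up to `burst` requests at once, refilled at `rate_per_second`."""

    def __init__(self, rate_per_second: float, burst: int, start: float = 0.0) -> None:
        self.rate = rate_per_second
        self.capacity = float(burst)
        self.tokens = float(burst)  # start with a full bucket
        self.updated = start

    def allow(self, now: float) -> bool:
        """Spend one token if available; `now` is a timestamp in seconds."""
        elapsed = max(0.0, now - self.updated)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.updated = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False


class PerClientLimiter:
    """Keeps one bucket per client key, such as a bot name or an IP address."""

    def __init__(self, rate_per_second: float, burst: int) -> None:
        self.rate = rate_per_second
        self.burst = burst
        self._buckets = {}

    def allow(self, client_key: str, now: float) -> bool:
        bucket = self._buckets.get(client_key)
        if bucket is None:
            bucket = TokenBucket(self.rate, self.burst, start=now)
            self._buckets[client_key] = bucket
        return bucket.allow(now)


if __name__ == "__main__":
    # Hypothetical policy: 1 request per second with a burst of 2 per client.
    limiter = PerClientLimiter(rate_per_second=1.0, burst=2)
    for t in (0.0, 0.0, 0.0, 1.0):
        print(t, limiter.allow("GPTBot", now=t))
```

In a live service you would pass `time.monotonic()` as `now` and answer rejected requests with an HTTP 429 status. The timestamp is a parameter here so the behavior is easy to test.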

Blocking and Filtering by IP

Another powerful tool is IP filtering. You can allow or deny traffic from specific IP ranges known to be associated with aggressive bots. With advanced firewall rules, you can segment traffic, limit access to sensitive parts of your site, or even redirect unwanted bots elsewhere.

Again, this level of filtering depends on having full control of your hosting environment—something shared hosting can’t offer.
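The core check behind IP filtering is simple, as this sketch with Python’s standard `ipaddress` module shows. The CIDR ranges below are reserved documentation ranges used as placeholders, not real crawler addresses; in practice you would load the ranges each bot operator publishes, or enforce the same list in your firewall.

```python
import ipaddress

# Placeholder ranges (reserved for documentation), NOT real crawler IPs.
BLOCKED_NETWORKS = [
    ipaddress.ip_network(cidr)
    for cidr in ("192.0.2.0/24", "198.51.100.0/24", "2001:db8::/32")
]


def is_blocked(client_ip: str, networks=BLOCKED_NETWORKS) -> bool:
    """Return True if the client address falls inside any blocked network."""
    try:
        address = ipaddress.ip_address(client_ip)
    except ValueError:
        # Unparseable input is not matched here; decide separately how to treat it.
        return False
    # An IPv4 address is never "in" an IPv6 network and vice versa.
    return any(address in network for network in networks)


if __name__ == "__main__":
    for ip in ("192.0.2.55", "203.0.113.9", "2001:db8::1"):
        print(ip, "blocked" if is_blocked(ip) else "allowed")
```

Checking the source address is harder to spoof than checking the user agent, which is why the two are often combined.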

Smarter Caching for Smarter Bots

Most AI crawlers request the same high-value pages repeatedly. With a dedicated server, you can set up caching rules specifically designed to handle bot traffic. That might mean serving static versions of your most-requested pages or creating separate caching logic for known user agents.

This reduces load on your dynamic backend and keeps your site fast for real users.
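Here is a small sketch of what separate caching logic for known user agents could look like. The `BotPageCache` class, the five-minute lifetime, and the `render` callback are assumptions for illustration; a real setup would more often do this in a reverse proxy or CDN than in application code.

```python
class BotPageCache:
    """Serves cached page bodies to known bots; other visitors always get a fresh render."""

    def __init__(self, render, ttl_seconds: float, bot_tokens=("GPTBot", "ClaudeBot")) -> None:
        self._render = render  # callable: path -> page body
        self._ttl = ttl_seconds
        self._bot_tokens = tuple(token.lower() for token in bot_tokens)
        self._store = {}  # path -> (expires_at, body)

    def is_bot(self, user_agent: str) -> bool:
        agent = user_agent.lower()
        return any(token in agent for token in self._bot_tokens)

    def get(self, path: str, user_agent: str, now: float) -> str:
        if not self.is_bot(user_agent):
            return self._render(path)  # humans bypass the bot cache
        cached = self._store.get(path)
        if cached is not None and cached[0] > now:
            return cached[1]  # still fresh: no backend work
        body = self._render(path)
        self._store[path] = (now + self._ttl, body)
        return body


if __name__ == "__main__":
    render_calls = []

    def render(path: str) -> str:
        render_calls.append(path)
        return f"<html>{path}</html>"

    cache = BotPageCache(render, ttl_seconds=300)
    cache.get("/docs", "GPTBot/1.1", now=0)
    cache.get("/docs", "GPTBot/1.1", now=10)  # served from cache
    print("backend renders:", len(render_calls))
```

The trade-off is freshness: bots may see content that is up to `ttl_seconds` old, which is usually acceptable for pages that rarely change.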

Load Balancing and Scaling

When crawler traffic surges, load balancing ensures traffic is distributed evenly across your infrastructure. This kind of solution is only available through dedicated or cloud-based setups. It’s essential for businesses that can’t afford downtime or delays—especially during peak hours or product launches.

If your hosting plan can’t scale on demand, you’re not protected against sudden bursts of traffic. Dedicated infrastructure gives you that peace of mind.
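For readers who want to see the principle, round-robin is the simplest way to distribute requests evenly. The sketch below is only an illustration: the backend names are hypothetical, and a real load balancer also handles health checks, connection counts, and failover.

```python
import itertools


class RoundRobinBalancer:
    """Hands out backends in a fixed rotation so each gets an equal share."""

    def __init__(self, backends) -> None:
        backends = list(backends)
        if not backends:
            raise ValueError("at least one backend is required")
        self._cycle = itertools.cycle(backends)

    def next_backend(self) -> str:
        return next(self._cycle)


if __name__ == "__main__":
    # Hypothetical backend names.
    balancer = RoundRobinBalancer(["app-1", "app-2", "app-3"])
    for request_id in range(6):
        print(f"request {request_id} -> {balancer.next_backend()}")
```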

Future-Proofing Your Website With Scalable Infrastructure

AI crawler traffic isn’t a passing trend. It’s growing, and fast. As more companies release LLM-powered tools, the demand for training data will keep increasing. This means more crawlers, more requests, and more strain on your infrastructure.

Image source: Sam Achek on Medium

Developers and IT teams are already planning for this shift. In more than 60 forum discussions, one question keeps showing up: “How should we adapt our infrastructure in light of AI?”

The answer often comes down to one word: flexibility.

Dedicated Servers Give You Room to Grow

Unlike shared hosting, dedicated servers aren’t limited by rigid configurations or traffic ceilings. You control the environment. That means you can test new bot mitigation strategies, introduce more advanced caching layers, and scale your performance infrastructure without needing to migrate platforms.

If an AI crawler’s behavior changes next quarter, your server setup can adapt immediately.

Scaling Beyond Shared Hosting Limits

With shared hosting, you’re limited by the needs of the lowest common denominator. You can’t expand RAM, add additional CPUs, or configure load balancers to absorb traffic surges. That makes scaling painful and often disruptive.

Dedicated servers, on the other hand, give you access to scaling options that grow with your business. Whether that means adding more resources, integrating content delivery networks, or splitting traffic between machines, the infrastructure can grow when you need it to.

Think Long-Term

AI traffic isn’t just a technical challenge. It’s a business one. Every slowdown, timeout, or missed visitor has a cost. Investing in scalable infrastructure today helps you avoid performance issues tomorrow.

A strong hosting foundation lets you evolve with technology instead of reacting to it. And when the next wave of AI tools hits, you’ll be ready.

SEO Implications of AI Crawler Management

“Will blocking bots hurt your rankings?” This question has been asked over 120 times in discussions across Reddit, WebmasterWorld, and niche marketing forums.

At InMotion Hosting, our short answer? Not necessarily.

AI crawlers like GPTBot and ClaudeBot are not the same as Googlebot. They don’t influence your search rankings. They’re not indexing your pages for visibility. Instead, they’re gathering data to train AI models.

Blocking them won’t remove your content from Google Search. But it can improve performance, especially if those bots are slowing your site down.

Focus on Speed, Not Just Visibility

Google has confirmed that site speed plays a role in search performance. If your pages take too long to load, your rankings can drop. This holds regardless of whether the slowdown comes from human traffic, server issues, or AI bots.

Heavy crawler traffic can push your response times past acceptable limits. That affects your Core Web Vitals scores. And those scores are now key signals in Google’s ranking algorithm.

Image source: Google PageSpeed Insights

If your server is busy responding to AI crawlers, your real users—and Googlebot—might be left waiting.

Balance Is Key

You don’t have to choose between visibility and performance. Tools like robots.txt let you allow search bots while limiting or blocking AI crawlers that don’t add value.

Start by reviewing your traffic. If AI bots are causing slowdowns or errors, take action. Improving site speed helps both your users and your SEO.

Migrating From Shared Hosting to a Dedicated Server: The Process

What does it take to make the shift from shared hosting to a dedicated server? Generally, this is what the process involves:

Running a performance benchmark on the current shared environment

Scheduling the migration during off-peak hours to avoid customer impact

Copying site files, databases, and SSL certificates to the new server

Updating DNS settings and testing the new environment

Blocking AI crawlers via robots.txt and fine-tuning server-level caching

Of course, with InMotion Hosting’s best-in-class support team, all this is no hassle at all.

Conclusion

AI crawler traffic isn’t slowing down.

Dedicated hosting offers a reliable solution for businesses experiencing unexplained slowdowns, rising server costs, or performance issues tied to automated traffic. It gives you full control over server resources, bot management, and infrastructure scalability.

If you’re unsure whether your current hosting can keep up, review your server logs. Look for spikes in bandwidth usage, unexplained slowdowns, or unfamiliar user agents. If those signs are present, it may be time to upgrade.

Protect your site speed from AI crawler traffic with a dedicated server solution that gives you the power and control to manage bots without sacrificing performance.